HAPI FHIR 6.2.0 (Vishwa)

Welcome to the winter release of HAPI FHIR! Support has been added for FHIR R4B (4.3.0). See the R4B Documentation for more information on what this means. Now onto the rest!

Breaking Changes

- The `ActionRequestDetails` class has been dropped (it has been deprecated since HAPI FHIR 4.0.0). This class was used as a parameter to the `SERVER_INCOMING_REQUEST_PRE_HANDLED` interceptor pointcut, but can be replaced in any existing client code with `RequestDetails`. This change also removes an undocumented behaviour where the JPA server internally invoked the `SERVER_INCOMING_REQUEST_PRE_HANDLED` pointcut a second time from within various processing methods. This behaviour caused performance problems for some interceptors (e.g. `SearchNarrowingInterceptor`) and no longer offers any benefit, so it is being removed.

- Previously, when ValueSets were pre-expanded after a LOINC terminology upload, expansion failed with an exception for each ValueSet with more than 10,000 properties. This problem has been fixed. The fix changed some freetext mappings (definitions of how resources are freetext indexed) for terminology resources, which requires reindexing those resources. To do this, use the `reindex-terminology` command.

- Removed the Flyway database migration engine. The migration table still tracks successful and failed migrations to determine which migrations need to be run at startup. Database migrations no longer need to run differently when using an older database version.

- The interceptor system has now deprecated the concept of ThreadLocal interceptors. This feature was added for an anticipated use case, but has never seen any real use that we are aware of and removing it should provide a minor performance improvement to the interceptor registry.

Security Changes

- Previously, upon hitting a subscription delivery failure, the failing payload was logged, which could be considered PHI. Resource content is no longer written to logs on subscription failure.

General Client/Server/Parser Changes

- Previously, Celsius and Fahrenheit temperature quantities were not normalized. This is now fixed. This change requires reindexing of resources containing Celsius or Fahrenheit temperature quantities.

- Fixed a bug where searching with a target resource parameter (Coverage:payor:Patient) as the value of an _include parameter would fail with a 500 response.

- Previously, the DELETE request type was not supported for any operations. DELETE is now supported, and is enabled for the operation $export-poll-status to allow cancellation of jobs.

- Previously, when a client provided a requestId within the source URI of a Meta.source, the provided requestId was discarded and replaced by an ID generated by the system. This has been corrected.

- In the JPA server, when a resource is being updated, the response will now include any tags or security labels which were not present in the request but were carried forward from the previous version of the resource.

- Previously, if the Endpoint Base URL was set to something different from the default value, the URL returned by $export-poll-status was incorrect. Once that URL was corrected, the binary file URL it returned was also incorrect. Both errors have been fixed, and the URLs returned from $export and $export-poll-status no longer contain the extra path from 'Fixed Value for Endpoint Base URL'.

- Previously, the `:nickname` qualifier only worked with the predefined `name` and `given` SearchParameters. This has been fixed and now the `:nickname` qualifier can be used with any string SearchParameters.

- Previously, when executing a '[base]/_history' search, '_since' and '_at' shared the same behaviour. When a user searched with '_at' for a date between the records' updated dates, the record current at the '_at' time was not returned. This has been corrected. The '_since' query parameter works as it previously did, and the '_at' query parameter returns the record current at the '_at' time.

- Previously, creating a DSTU3 SearchParameter with an expression that does not start with a resource type would throw an error. This has been corrected.

- There was a bug in content-type negotiation when reading Binary resources. Previously, when a client requested a Binary resource with an `Accept` header that matched the `contentType` of the stored resource, the server would return an XML representation of the Binary resource. This has been fixed, and a request with a matching `Accept` header will receive the stored binary data directly as the requested content type.

- A new built-in server interceptor called the InteractionBlockingInterceptor has been added. This interceptor allows individual operations to be included/excluded from a RestfulServer's exported capabilities.

- The OpenApi generator now allows additional CSS customization for the Swagger UI page, as well as the option to disable resource type pages.

- Modified BinaryAccessProvider to use a safer method of checking the contents of an input stream. Thanks to @ttntrifork for the fix!

- Fixed issue where adding a sort parameter to a query would return an incomplete result set.

- Added new attribute for the @Operation annotation to define the operation's canonical URL. This canonical URL value will populate the operation definition in the CapabilityStatement resource.

- A new interceptor pointcut `STORAGE_TRANSACTION_PROCESSING` has been added. Hooks for this pointcut can examine and modify FHIR transaction bundles being processed by the JPA server before processing starts.

- In the JPA server, when deleting a resource the associated tags were previously deleted even though the FHIR specification states that tags are independent of FHIR versioning. After this fix, resource tags and security labels will remain when a resource is deleted. They can be fetched using the `$meta` operation against the deleted resource, and will remain if the resource is brought back in a subsequent update.

- Fixed a bug which caused a failure when combining a Consent Interceptor with version conversion via the `Accept` header.
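The temperature normalization change listed above can be illustrated with a small sketch. It assumes that Kelvin is the canonical unit that values are normalized to, and that the units are identified by the UCUM codes `Cel` and `[degF]`. The class and method names are hypothetical and are not part of the HAPI FHIR API. Because a search index would hold the normalized value rather than the original one, resources indexed before the fix need to be reindexed.

```java
import java.math.BigDecimal;
import java.math.MathContext;

// Hypothetical helper, not a HAPI FHIR class: converts a temperature
// quantity to Kelvin so that values in different units compare equal.
final class TemperatureNormalizer {
    private static final BigDecimal KELVIN_OFFSET = new BigDecimal("273.15");

    static BigDecimal toKelvin(BigDecimal value, String ucumCode) {
        switch (ucumCode) {
            case "K":
                return value;
            case "Cel":
                // K = C + 273.15
                return value.add(KELVIN_OFFSET);
            case "[degF]":
                // K = (F - 32) * 5 / 9 + 273.15
                return value.subtract(new BigDecimal("32"))
                        .multiply(new BigDecimal("5"))
                        .divide(new BigDecimal("9"), MathContext.DECIMAL64)
                        .add(KELVIN_OFFSET);
            default:
                throw new IllegalArgumentException("Unsupported unit: " + ucumCode);
        }
    }

    public static void main(String[] args) {
        // Both inputs describe the same body temperature in different units.
        System.out.println(toKelvin(new BigDecimal("37"), "Cel"));
        System.out.println(toKelvin(new BigDecimal("98.6"), "[degF]"));
    }
}
```

With values normalized this way, a search for 37 Cel and a search for 98.6 [degF] would compare against the same stored number.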

Bulk Export

- Previously, bulk export for Group type with _typeFilter did not apply the filter if it was for the patients, and returned all members of the group. This has now been fixed, and the filter will apply.

- A regression was introduced in 6.1.0 which caused bulk export jobs to not default to the correct output format when the `_outputFormat` parameter was omitted. This behaviour has been fixed, and if omitted, will now default to the only legal value `application/fhir+ndjson`.

- Fixed a bug in Group Bulk Export where the server would crash on Oracle due to too many clauses.

- Fixed a Group Bulk Export bug which was causing it to fail to return resources due to an incorrect search.

- Fixed a Group Bulk Export bug in which the group members would not be expanded correctly.

- Fixed a bug in Group Bulk Export: if a group member was part of multiple groups, the other groups were also included in the export when the Group resource type was specified. Now, when doing an export on a specific group, and you elect to return Group resources, only the called Group will be returned, regardless of cross-membership.

- Previously, Patient Bulk Export only supported the endpoint [fhir base]/Patient/$export, which exports all patients. Now, Patient Export can be done at the instance level, following this format: `[fhir base]/Patient/[id]/$export`, which will export only the records for one patient. Additionally, added support for the `patient` parameter in Patient Bulk Export, which is another way to get the records of only one patient.

- Fixed the `$export-poll-status` endpoint so that when a job is complete, this endpoint now correctly includes the `request` and `requiresAccessToken` attributes.

- Fixed a bug where /Patient/$export would fail if the _type=Patient parameter was not included.

- Previously, Group Bulk Export did not support the inclusion of resources referenced in the resources in the patient compartment. This is now supported.

- A previous fix resulted in Bulk Export files containing mixed resource types, which is not allowed in the bulk data access IG. This has been corrected.

- A previous fix resulted in Bulk Export files containing duplicate resources, which is not allowed in the bulk data access IG. This has been corrected.

- Previously, the number of resources per binary file in bulk export was a static 1000. This is now configurable by a new JpaStorageSettings property called 'setBulkExportFileMaximumCapacity()', and the default value is 1000 resources per file.

- By default, if the `$export` operation receives a request that is identical to one that has been recently processed, it will attempt to reuse the batch job from the former request. A new configuration parameter has been introduced that disables this behavior and forces a new batch job on every call.

- Bulk Group export was failing to export Patient resources when Client ID mode was set to ANY. This has been fixed.

- Previously, Bulk Export jobs were always reused, even if completed. Now, jobs are only reused if an identical job is already running, and has not yet completed or failed.
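As a rough illustration of the Patient export endpoints described above, the following sketch builds the type-level and instance-level kick-off URLs using only the Java standard library. The base URL, the patient ID, the class and its method names are hypothetical, and the `Patient/123` value format shown for the `patient` parameter is an assumption rather than something these notes specify.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical URL builder, not a HAPI FHIR class.
final class BulkExportUrls {

    /** Type-level export: [fhir base]/Patient/$export */
    static String patientExport(String fhirBase, Map<String, String> params) {
        return fhirBase + "/Patient/$export" + query(params);
    }

    /** Instance-level export: [fhir base]/Patient/[id]/$export */
    static String patientInstanceExport(String fhirBase, String patientId, Map<String, String> params) {
        return fhirBase + "/Patient/" + patientId + "/$export" + query(params);
    }

    // Builds a query string, URL-encoding each parameter value.
    private static String query(Map<String, String> params) {
        if (params.isEmpty()) {
            return "";
        }
        StringJoiner joiner = new StringJoiner("&", "?", "");
        for (Map.Entry<String, String> entry : params.entrySet()) {
            joiner.add(entry.getKey() + "=" + URLEncoder.encode(entry.getValue(), StandardCharsets.UTF_8));
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        String base = "http://example.org/fhir"; // hypothetical server base
        // Records for a single patient, selected by instance-level endpoint.
        System.out.println(patientInstanceExport(base, "123", Map.of()));
        // Records for a single patient, selected by the patient parameter.
        System.out.println(patientExport(base, Map.of("patient", "Patient/123")));
        // Explicit output format (the default when the parameter is omitted).
        System.out.println(patientExport(base, Map.of("_outputFormat", "application/fhir+ndjson")));
    }
}
```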

Other Operations

- Extended $member-match to validate the matched patient against family name and birthdate.

- `$mdm-submit` can now be run as a batch job, which will return a job ID, and can be polled for status. This can be accomplished by sending a `Prefer: respond-async` header with the request.

- Previously, if the `$reindex` operation failed with a `ResourceVersionConflictException`, the related batch job would fail. This has been corrected by adding 10 retry attempts for transactions that have failed with a `ResourceVersionConflictException` during the `$reindex` operation. In addition, the `ResourceIdListStep` was submitting one more resource than expected (i.e. 1001 records processed during a `$reindex` operation if only 1000 `Resources` were in the database). This has been corrected.
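The retry behaviour described for `$reindex` follows a common pattern, sketched below in plain Java. This is not the HAPI FHIR implementation: the exception class is a hypothetical stand-in for `ResourceVersionConflictException`, the method name is invented, and the sketch assumes that "10 retry attempts" means up to 10 retries after the initial attempt.

```java
import java.util.function.Supplier;

// Hypothetical retry helper, not a HAPI FHIR class.
final class ConflictRetry {

    /** Stand-in for a version conflict raised while committing a transaction. */
    static final class VersionConflictException extends RuntimeException {
        VersionConflictException(String message) {
            super(message);
        }
    }

    /**
     * Runs the transaction, retrying only on version conflicts.
     * After maxRetries failed retries the last conflict is rethrown.
     */
    static <T> T runWithRetries(Supplier<T> transaction, int maxRetries) {
        int retriesUsed = 0;
        while (true) {
            try {
                return transaction.get();
            } catch (VersionConflictException e) {
                if (retriesUsed >= maxRetries) {
                    throw e; // give up: the job fails as it did before
                }
                retriesUsed++;
            }
        }
    }
}
```

Only the conflict exception is caught, so any other failure still propagates immediately instead of being retried.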

Command-line Tool Changes

- Added a new optional parameter to the `upload-terminology` operation of the HAPI-FHIR command-line tool. You can pass the `-s` or `--size` parameter to specify the maximum size that will be transmitted to the server, before a local file reference is used. This parameter can be filled in using human-readable format, for example: `upload-terminology -s "1GB"` will permit zip files up to 1 gigabyte, and anything larger than that would default to using a local file reference.

- Previously, using the `import-csv-to-conceptmap` command in the command-line tool successfully created ConceptMap resources without a `ConceptMap.status` element, which is against the FHIR specification. This has been fixed by adding a required option for status for the command.

- Added support for the -l parameter for providing a local validation profile in the HAPI FHIR command-line tool.

- Previously, when the upload-terminology command was used to upload a terminology file with endpoint validation enabled, a validation error occurred due to a missing file content type. This has been fixed by specifying the file content type of the uploaded file.

- For SNOMED CT, upload-terminology now supports both the Canadian and International editions' file names for the SCT Description File.

- Documentation was added for the `reindex-terminology` command.
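The human-readable size accepted by the `-s` / `--size` option of `upload-terminology` can be pictured with the sketch below. It is not the command-line tool's actual parser: the class name, the accepted unit suffixes, and the use of binary multiples (1GB = 1024^3 bytes) are all assumptions made for illustration.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser, not part of the HAPI FHIR command-line tool.
final class HumanReadableSize {
    private static final Pattern SIZE =
            Pattern.compile("\\s*(\\d+)\\s*([KMGT]?B)\\s*", Pattern.CASE_INSENSITIVE);

    /** Parses values such as "512MB" or "1GB" into a byte count. */
    static long parseBytes(String text) {
        Matcher matcher = SIZE.matcher(text);
        if (!matcher.matches()) {
            throw new IllegalArgumentException("Unrecognized size: " + text);
        }
        long number = Long.parseLong(matcher.group(1));
        long multiplier;
        switch (matcher.group(2).toUpperCase(Locale.ROOT)) {
            case "B":  multiplier = 1L; break;
            case "KB": multiplier = 1024L; break;
            case "MB": multiplier = 1024L * 1024; break;
            case "GB": multiplier = 1024L * 1024 * 1024; break;
            case "TB": multiplier = 1024L * 1024 * 1024 * 1024; break;
            default: throw new IllegalStateException("Unreachable unit");
        }
        // multiplyExact fails loudly instead of silently overflowing.
        return Math.multiplyExact(number, multiplier);
    }

    /** Files above the limit fall back to a local file reference. */
    static boolean shouldUseLocalFileReference(long fileSizeBytes, String limit) {
        return fileSizeBytes > parseBytes(limit);
    }
}
```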

JPA Server General Changes

- Changed Minimum Size (bytes) in FHIR Binary Storage of the persistence module from an integer to a long. This will permit larger binaries.

- Previously, if a FullTextSearchSvcImpl was defined, but was disabled via configuration, there could be data loss when reindexing due to transaction rollbacks. This has been corrected. Thanks to @dyoung-work for the fix!

- Fixed a bug where the $everything operation on Patient instances and the Patient type was not correctly propagating the transactional semantics. This was causing callers to not be in a transactional context.

- Previously, when a phonetic search parameter was updated, existing resources did not have their search parameter String updated upon reindex if the normalized String was the same letter as under the old algorithm (e.g. JN to JAN). Searching on the new normalized String was failing to return results. This has been corrected.

- Previously, when creating a `DocumentReference` with an `Attachment` containing a URL over 254 characters, an error was thrown. This has been corrected and now an `Attachment` URL can be up to 500 characters.

JPA Server Performance Changes

- Initial page loading has been optimized to reduce the number of prefetched resources. This should improve the speed of initial search queries in many cases.

- Previously, cascading deletes did not work correctly if multiple threads initiated a delete at the same time: either the resource would not be found or there would be a collision on inserting the new version. This change fixes the problem by better handling these conditions to either ignore an already deleted resource or to keep retrying in a new inner transaction.

- When using SearchNarrowingInterceptor, FHIR batch operations with a large number of conditional create/update entries exhibited very slow performance due to an unnecessary nested loop. This has been corrected.

- When using ForcedOffsetSearchModeInterceptor, any synchronous searches initiated programmatically (i.e. through the internal Java API, not the REST API) will not be modified. This prevents issues when a Java call requests a synchronous search larger than the default offset search page size.

- Previously, when using _offset, queries would return short pages and repeat results on different pages. This has now been fixed.

- Processing for `_include` and `_revinclude` parameters in the JPA server has been streamlined, which should improve performance on systems where includes are heavily used.

Database-specific Changes

- Database migration steps were failing with Oracle 19C. This has been fixed by allowing the database engine to skip dropping non-existent indexes.

Terminology Server, Fulltext Search, and Validation Changes

- With Elasticsearch configured, including terminology, an exception was raised while expanding a ValueSet with more than 10,000 concepts. This has now been fixed.

- Previously, when ValueSets were pre-expanded after a LOINC terminology upload, expansion failed with an exception for each ValueSet with more than 10,000 properties. This problem has been fixed. The fix changed some freetext mappings (definitions of how resources are freetext indexed) for terminology resources, which requires reindexing those resources. To do this, use the `reindex-terminology` command.

- Added support for AWS OpenSearch to Fulltext Search. If an AWS Region is configured, HAPI-FHIR will assume you intend to connect to an AWS-managed OpenSearch instance, and will use Amazon's DefaultAwsCredentialsProviderChain to authenticate against it. If both username and password are provided, HAPI-FHIR will attempt to use them as a static credentials provider.

- Search for strings with the `:text` qualifier was not performing advanced search. This has been corrected.

- The LOINC terminology upload process was enhanced to consider 24 additional properties which were defined in the loinc.csv file but not uploaded.

- The LOINC terminology upload process was enhanced by loading `MAP_TO` properties defined in the MapTo.csv input file to TermConcept(s).

MDM (Master Data Management)

- MDM messages were using the resource id as a message key when it should be using the EID as a partition hash key. This could lead to duplicate golden resources on systems using Kafka as a message broker.

- `$mdm-submit` can now be run as a batch job, which will return a job ID, and can be polled for status. This can be accomplished by sending a `Prefer: respond-async` header with the request.
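The message key fix above matters because a partitioned broker typically routes each message by hashing its key. The sketch below shows the idea with a deliberately simplified partitioner: `String.hashCode()` stands in for whatever hash the broker really uses, and the class name, method name, IDs, and EID value are all hypothetical.

```java
// Hypothetical illustration, not HAPI FHIR or Kafka code.
final class MdmPartitioning {

    /** Maps a message key onto one of partitionCount partitions. */
    static int partitionFor(String messageKey, int partitionCount) {
        // floorMod keeps the result non-negative for negative hash codes.
        return Math.floorMod(messageKey.hashCode(), partitionCount);
    }

    public static void main(String[] args) {
        int partitions = 12;
        // Two source records for the same person share an EID but have
        // different resource ids.
        String eid = "EID-42";
        System.out.println("keyed by resource id: "
                + partitionFor("Patient/1", partitions) + " and "
                + partitionFor("Patient/2", partitions));
        System.out.println("keyed by EID: "
                + partitionFor(eid, partitions) + " and "
                + partitionFor(eid, partitions));
    }
}
```

When the key is the resource id, the two records can land on different partitions and be processed concurrently by different consumers, each of which may create its own golden resource. Keying by EID sends both records to the same partition, so they are handled in order by a single consumer.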

Batch Framework

- Fast-tracking of batch jobs that produced only one chunk has been rewritten to use Quartz triggerJob. This ensures that at most one thread is updating job status at a time. In addition, jobs that had FAILED, ERRORED, or been CANCELLED could be accidentally set back to IN_PROGRESS; this has been corrected.

- All Spring Batch dependencies and services have been removed. Async processing has fully migrated to Batch 2.

- In HAPI-FHIR 6.1.0, a regression was introduced into bulk export causing exports beyond the first one to fail in strange ways. This has been corrected.

- A remove method has been added to the Batch2 job registry. This will allow for dynamic job registration in the future.

- Batch2 jobs were incorrectly prevented from transitioning from ERRORED to COMPLETE status.

Package Registry

- Provided the ability to have the NPM package installer skip installing a package if it is already installed and matches the version requested. This can be controlled by the `reloadExisting` attribute in PackageInstallationSpec. It defaults to `true`, which is the existing behaviour. Thanks to Craig McClendon (@XcrigX) for the contribution!

By: james

Demonstration/Test Page

A public test server can be found at http://hapi.fhir.org. This server is built entirely using components of HAPI-FHIR and demonstrates all of its capabilities. This server is also entirely open source. You can host your own copy by following instructions on our JPA Server documentation.

Commercial Support

Commercial support for HAPI FHIR is available through Smile CDR.

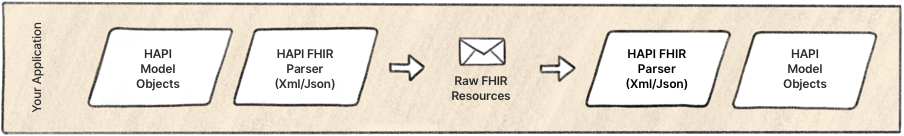

Use the HAPI FHIR parser and encoder to convert between FHIR and your application's data model.

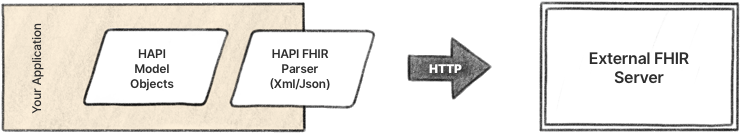

Use the HAPI FHIR client in an application to fetch from or store resources to an external server.

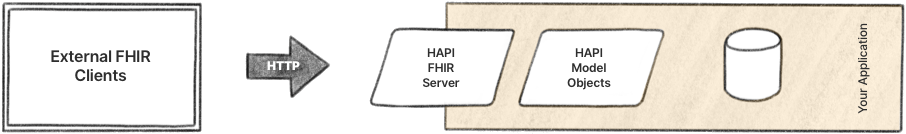

Use the HAPI FHIR server in an application to allow external applications to access or modify your application's data.

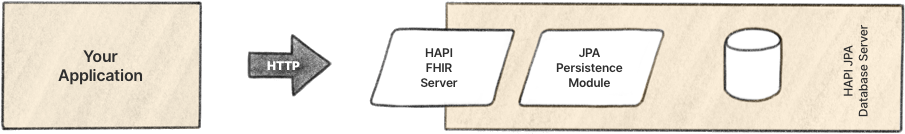

Use the HAPI JPA/Database Server to deploy a fully functional FHIR server you can develop applications against.

A Free and Open Source Global Good:
